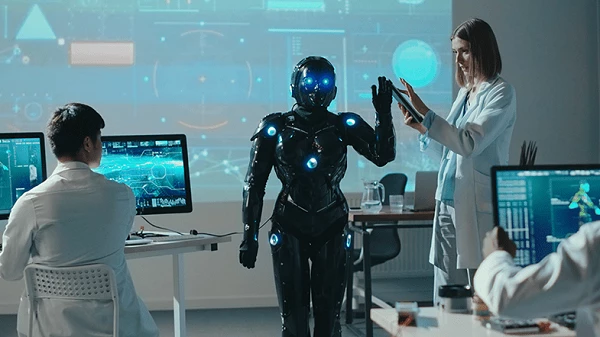

A third of scientists working on AI say it could cause global disaster


More than one-third of artificial intelligence researchers around the world agree that AI decisions could cause a catastrophe as bad as all-out nuclear war in this century. The findings come from a survey of 327 researchers who had recently co-authored papers on AI research in natural language processing. In recent years, there have been big advances in this area, with the increased use of large language models that display impressive capabilities, including writing computer code and creating novel images based on text prompts.

The survey, carried out by Julian Michael at the New York University Center for Data Science and his colleagues, revealed that 36 per cent of all respondents thought nuclear-level catastrophe was possible. “If it was actually an all-out nuclear war that AI contributed to, there are plausible scenarios that could get you there,” says Paul Scharre at the Center for a New American Security, a think tank based in Washington DC. “But it would also require people to do some dangerous things with military uses of AI technology.”


US military officials have expressed scepticism about arming drones with nuclear weapons, let alone giving AI a major role in nuclear command-and-control systems. But Russia is reportedly developing a drone torpedo with autonomous capabilities that could deliver a nuclear strike against coastal cities.

Fears about the possibility of nuclear-level catastrophe were even greater when looking specifically at responses from women and people who said they belonged to an underrepresented minority group in AI research: 46 per cent of women saw this as possible, and 53 per cent of people in minority groups agreed with the premise.

This even greater pessimism about our ability to manage dangerous future technology may reflect the “present-day track record of disproportionate harms to these groups”, the authors write. The survey may even underestimate how many researchers believe AI poses serious risks. Some survey respondents said they would have agreed that AI poses serious risks in a less extreme scenario than an all-out nuclear war catastrophe.

“Concerns brought up in other parts of the survey feedback include the impacts of large-scale automation, mass surveillance, or AI-guided weapons,” says Michael. “But it’s hard to say if these were the dominant concerns when it came to the question about catastrophic risk.”

Separately, 57 per cent of all survey respondents saw developments in large AI models as “significant steps toward the development of artificial general intelligence”. That evokes the idea of an AI with intellectual capabilities equalling those of humans. And 73 per cent agreed that AI automation of labour could lead to revolutionary societal changes on the scale of the industrial revolution.

Given that researchers expect significant advances in AI capabilities, it is somewhat heartening that just 36 per cent see a catastrophic risk from AI as being plausible, says Scharre. But he cautions that it is important to pay attention to any AI-related risks that can affect large swathes of society.

“I’m much more concerned about AI risk that seems less catastrophic than all-out nuclear war but is probably likely, because of the challenges in dealing with the systems as we integrate them into different industries and military operations,” says Scharre.

Reference: arxiv.org/abs/2208.12852
